No one who is even vaguely familiar with the ongoing farce that is Brexit (which probably means everyone!) can be unaware of the extraordinary amount of propaganda peddled by both sides. Politicians present diametrically opposed ‘facts’ with completely straight faces, even though at least one side must know that it is telling a considerable untruth.

Something similar happens in the IT world, especially when it comes to emerging technologies. The proponents of a new idea are quick to tell anyone who cares to listen that everyone is embracing the new technology and that they’d be foolish to get left behind. Meanwhile, the companies that produce the ‘traditional’ technology under threat tell the opposite tale: that no one is using the new-fangled, unproven, highly suspect, unstable product or software. The truth might lie somewhere in the middle.

Nothing astounding about this observation, one might think. And no, there isn’t, but I thought it worth reminding one and all that anything presented as a fact needs to be carefully checked before it can be accepted as such.

There’s no doubting that, right now, the technology world is doing some amazing work, but quite how amazing it is, and quite how helpful it is to customers (as opposed to companies that simply wish to cut costs), remains open to debate.

AI is a great example. Critics say it will never replace human thinking; supporters say it’s merely a question of time before the world’s human workforce is replaced by robots. And the truth lies somewhere between these two extremes.

And, of course, there may be a world of difference between what it is possible to achieve with AI, and what makes commercial (if not moral) sense.

No one will convince me that automated customer service will ever be as good as speaking to a competent human; the automated offering never covers every reason a customer might wish to make contact. However, chatbots and the like make perfect commercial sense if you are only interested in saving large amounts of money and not too bothered if you alienate a few customers.

So, moving forward, all business owners have to beware the claims made for both new and old technologies, and also understand what impact any change in approach will have on their customer base. Such decisions might just make solving Brexit look like a minor problem in comparison!

NTT reveals lack of strategic ownership is stalling digital transformation plans.

According to NTT Ltd.’s 2019 Digital Means Business Report, only 11% of organizations are highly satisfied with those in charge of spearheading digital transformation, even though almost three-quarters of them are already underway on their transformation journey.

Organizations worldwide are achieving some success with digital transformation, but there is still a strong belief that it requires radical, far-reaching change. Combined with a lack of strong transformational leadership and of focus on the need to change people, this belief is holding many companies back.

Wayne Speechly, VP of Advanced Competencies, NTT Ltd. said: “Organizations are still grappling with how to shape their business to capitalise on a connected future. Digital creates the opportunity for value to be constantly derived from transformation initiatives across the business. Organizations should focus less on perfecting a grand digital plan, and more on taking considered and iterative steps in their transformation journey to progress value and clarity of subsequent moves. For various reasons, an organization is its own worst enemy, so any change has to be supported by pragmatic, self-aware leadership who are themselves changing.”

Almost half of business leaders are failing to achieve a positive financial return from digital transformation projects they have executed despite considering themselves to be ‘digital thinkers’, reveals a new Censuswide survey.

The poll of 250 business leaders at public and private sector organisations with more than 1,000 employees, commissioned by leading HR and payroll provider MHR, found that while 90 percent of business leaders have been responsible for commissioning one or more digital transformation projects, only 54 percent believed those projects were financially benefitting the organisation.

This is despite 95 percent of business leaders perceiving themselves to be ‘digital thinkers’ and over four-fifths (84 percent) believing that they personally have the necessary digital skills required to oversee digital transformation projects in their organisation.

Michelle Shelton, Product Planning Director at MHR, says: “The research highlights that while business leaders are confident in their own abilities to oversee digital change, the reality is that many projects are failing to deliver the financial benefits.

“One of the key drivers for implementing digital change is to deliver cost savings and revenue growth, but this is only achievable if people with the right skills, including a strong financial awareness, are spearheading the change.

“Ahead of carrying out a digital transformation project, it’s important to collaborate with all departments to create a joint strategy and establish a change team responsible for delivering the change.

“By adopting a collaborative approach organisations can leverage the skills and expertise of its people, and gain a true understanding of its current operation to establish a clear vision for the future.

“Digital transformation projects will almost certainly fail unless you take your people on the journey with you. Subsequently, any change team should naturally include HR. As stewards of company culture, HR professionals can ensure any changes are successfully embedded and embraced by its people, and play an active role in helping create a more ‘digital savvy’ workforce by recruiting new talent to plug any skills gaps and arranging training for existing employees to support the adoption of new software.”

GoTo by LogMeIn has published the findings from a new global survey conducted by Ovum Research. The survey of 2,100 IT buyers and leaders found that communications and collaboration tools are “business critical” to the success of organisations, and that investment in these tools needs to be made a priority to support a growing remote workforce and the rise of digital natives in the office.

As businesses plan for 2020 and beyond, collaboration tools are a major focus for unified communications and collaboration (UCC) deployments, with 73% of survey respondents expecting spending to increase. However, it’s not just about finding a collaboration platform; it’s about finding one that meets the needs of a changing workforce, in which more employees are working remotely and digital natives are joining as full-time employees. In fact, the survey found that 93% of respondents agreed that digital natives have different needs and expectations in the workplace, and over half of CIOs (56%) are looking to grow their collaborative software offering to meet that demand.

Digital Natives Need to Be Front and Center of Planning:

According to the survey, C-Suite IT leaders prioritised these items as steps they’re taking in anticipation of the growing digital native workforce:

IT Leaders Need to Provide for Today and Future Proof For What’s To Come:

IT leaders play a more strategic role than ever before. They need to consider whether or not to adopt new technology, and accommodate and support a diverse and dispersed workforce, all while keeping costs down and showing ROI for their decisions. For example:

AI Continues To Be Top of Mind:

AI capabilities are continuously improving in ways that help employees. In the coming year, more and more IT leaders will adopt AI technology for smarter, more efficient collaboration:

“Today’s CIOs and IT leaders need to play a more strategic role than ever before. They’ve got a new seat at the table and are expected to drive overall business strategy. The very nature of the way people work is changing and that change needs to be supported through great technology that is simple to use, easy to adopt and painless to manage,” said Mark Strassman, Senior Vice President and General Manager of UCC at LogMeIn. “IT leaders need to find technology partners that are meeting demands of the modern workforce. They need to support digital natives and remote employees to optimize today, modernize for tomorrow and set their employees and business up for long-term success.”

Aryaka has published its third annual State of the WAN report for 2019, which reveals SD-WAN, cloud and application performance challenges, priorities and plans for 2019 and beyond.

When comparing this year’s results to the 2018 report, a pattern emerges: more respondents identified complexity, even surpassing performance, as the biggest challenge with their WAN. As applications and cloud connectivity become more complex, so do the networks required to support them. Organizations may recognize this, but don’t always have the expertise or resources to deliver on their digital transformation objectives.

“Our research on migration to SD-WAN concurs with Aryaka’s latest survey results regarding the complexities of managing the underlying WANs in enterprise networks,” said Erin Dunne, Director of Research Services at Vertical Systems Group. “We are seeing more enterprises choose managed SD-WAN solutions focused on providing dynamic WAN connectivity to ensure optimal end-to-end performance for all types of business-critical applications.”

Study Methodology

The third annual Global Aryaka 2019 State of the WAN study surveyed 795 global IT and network practitioners at companies across all verticals, headquartered primarily in North America (57 percent), Europe (20 percent) and Asia excluding China (12 percent). Respondents’ companies had up to 1,000 employees (31 percent), up to 10,000 employees (32 percent) or over 10,000 employees (24 percent). The survey asked respondents about their networking and performance challenges, priorities and plans for 2019 and beyond.

What follows are a few of the key findings from this year’s report.

Cloud Models and New Applications Are Driving Digital Transformation

The majority of surveyed enterprises operate in highly distributed and complex IT environments. Over one-third have 20 or more branches around the globe. Half leverage five or more cloud providers or SaaS applications, and almost 15 percent have over 1000 applications deployed. These trends impact the enterprise’s ability to properly provision, optimize, troubleshoot, and secure their WAN and multi-cloud environments.

Network and Application Performance is Paramount

With lines of business moving at a much faster pace, the WAN needs to evolve to meet the needs of digital transformation. However, traditional architectures do not effectively enable a multi-cloud approach because they are misaligned with a cloud consumption model predicated on flexibility. This results in cost, complexity and performance challenges. Forty percent of those surveyed said cost was a challenge for them (7 percent higher than 2018). Thirty-five percent said their challenge was high complexity, manageability and maintenance (14 percent higher than 2018). And 24 percent had concerns about slow access to cloud services and SaaS applications (3 percent lower than 2018). With limited visibility available, enterprises are split on the source of their application challenges: 19 percent point to the branch, 23 percent to the middle mile, and 24 percent to the application origin.

Unified Communications-as-a-Service (UCaaS) Challenges

One common complaint is UCaaS performance across traditional WANs due to latency and packet loss. Challenges include poor quality at 41 percent (an 11 percent drop from 2018), lag and delay at 31 percent (12 percent higher than 2018), and management at 28 percent (18 percent higher than 2018). Clearly, management is becoming a greater concern.
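Several year-on-year comparisons in this report, such as “41 percent (an 11 percent drop from 2018)”, do not say whether the change is measured in percentage points or as a relative percentage, and the two readings imply different 2018 baselines. The short Python sketch below back-calculates the 2018 figure under each reading for the three UCaaS challenges. The function names are illustrative, and which reading Aryaka intended is not stated in the source.

```python
def prior_if_points(current: float, change: float) -> float:
    """Earlier share implied if `change` is in percentage points."""
    return current - change


def prior_if_relative(current: float, change: float) -> float:
    """Earlier share implied if `change` is a relative percentage change."""
    return current / (1 + change / 100)


# 2019 share (percent) and the stated change versus 2018 (negative = drop).
ucaas_challenges = {
    "poor quality": (41, -11),
    "lag and delay": (31, 12),
    "management": (28, 18),
}

for name, (share_2019, change) in ucaas_challenges.items():
    points = prior_if_points(share_2019, change)
    relative = prior_if_relative(share_2019, change)
    print(f"{name:<14} 2018 = {points:.1f}% (points) or {relative:.1f}% (relative)")
```

Read as percentage points, management concerns were cited by only 10 percent of respondents in 2018 and have nearly tripled; read as a relative change, they rose from roughly 24 percent. Either way the conclusion that management is a growing concern holds, but the size of the shift depends on the reading.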

Innovation Drives a Better Experience

Traditional carriers and do-it-yourself (DIY) deployments are not equipped to handle the agility required for digital transformation. Solving slow application performance and managing vendors are the top time-sucks for IT organizations. Forty-five percent of respondents said slow application performance is leading to poor user experience in branch offices. Thirty-six percent said it is leading to poor user experience for remote and mobile users (9 percent higher than 2018). And 28 percent said managing telcos or service providers is a nightmare (12 percent higher than 2018).

Managed SD-WAN is the Future

As organizations plan for the future, their top IT priorities are advanced security (34 percent), cloud migration (31 percent), IT automation (28 percent), and big data and analytics (28 percent). Yet traditional WAN and DIY SD-WAN solutions can’t always support these initiatives, and judging by the growing number of respondents who have issues managing their telcos, the situation is only getting worse. A fully managed global SD-WAN solution promises to provide the flexibility, visibility, enhanced performance and cost control required in a cloud-first era. And the characteristics that enterprises look for in any SD-WAN solution closely track their overall IT priorities: 47 percent are looking for cloud and SaaS connectivity, 43 percent advanced security, 37 percent WAN optimization and application acceleration, and 34 percent are looking to replace their MPLS network.

“We are living in a complex multi-cloud and multi-SaaS application world. As global enterprises continue to innovate by embracing new technologies and migrating to the cloud, they also face new challenges,” said Shashi Kiran, CMO of Aryaka. “Whether it’s an increasing number of global sites through expansion, poor performing cloud-based applications, increasing costs or the time it takes to manage multiple vendors, many organizations are at an inflection point: transform the WAN now or risk falling behind and losing out to competitors.”

Wipro has released its 2019 State of Cybersecurity Report, which highlights the rising importance of cybersecurity defense to global leaders, the emergence of the CISO as a C-Suite role, and an unprecedented focus on security as a pervasive part of business operations.

The study found that one in five CISOs are now reporting directly to the CEO, 15% of organizations have a security budget of more than 10% of their overall IT budgets, 65% of organizations are tracking and reporting regulatory compliance, and 25% of organizations are carrying out security assessments in every build cycle. In addition, 39% of organizations now have a dedicated cyber insurance policy. All of these points showed dramatic increases from previous years.

The annual study is based on three months of primary and secondary research, including surveys of security leadership, operational analysts, and 211 global organizations across 27 countries.

Additional highlights from the report:

Yet organizations are aligning themselves to cyber-resilient strategies in new ways:

Raja Ukil, Global Head for Cybersecurity & Risk Services, Wipro Limited said, “With organizations riding the digital wave, security strategies need to be enhanced to address the changing landscape and enable a smooth and safe transition. Security is also evolving to be a pervasive part of core business operations, and countries are establishing active cyber defence strategies and functions to foster partnerships with the private sector enterprises and with other countries. Amidst growing threats, leaders are collaborating more than ever before in new and innovative ways to mitigate the risks.”

Respondents identify people as biggest source of cyber threats, with Facebook and BA as most notable breaches – but skills shortage has bolstered employment prospects.

A lack of resources is the single biggest challenge for the IT security market, followed by a lack of experience and skills, according to “The Security Profession in 2018/19” report from the Chartered Institute of Information Security (previously known as the IISP), the independent not-for-profit organisation responsible for promoting professionalism and skills in the IT profession. At least 45 percent of respondents chose a lack of resources as the biggest challenge, compared with 37 percent for a lack of experience and 31 percent for a lack of skills. Ultimately, security professionals feel their budgets are not giving them what they need: only 11 percent said security budgets were rising in line with, or ahead of, the cyber security threat level, while the majority (52 percent) said budgets were rising, but not fast enough.

Professionals were also clear about where threats originate. Overwhelmingly, 75 percent see people as the biggest challenge they face in cyber security, with processes and technology nearly equal at 12 and 13 percent respectively. This may explain the need for more resources even as budgets increase: people are a far more complex issue to deal with. Yet at the same time, there are signs of improvement. More than 60 percent of IT professionals say that the profession is getting better, or much better, at dealing with security incidents when they occur, with only 7 percent saying it is getting worse. By contrast, less than half (48 percent) of respondents felt the industry is getting better at defending systems from attack and protecting data, with 14 percent saying it is getting worse. This suggests an ongoing shift in the industry from focusing on prevention to an all-encompassing approach to security.

“IT security is a constant war of attrition between security teams and attackers, and attackers have more luxury to innovate and try new approaches,” said Amanda Finch, CEO, Chartered Institute of Information Security. “As a result, the industry’s focus on dealing with breaches after they occur, rather than active prevention, isn’t a great surprise – the former is where IT teams have much more control. Yet in order to deal with breaches effectively, security teams still need the right resources and to increase those in line with the threat. Otherwise they will inevitably have to make compromises.”

Other relevant statistics from the research included:

The focus on a lack of resources, experience and skills suggests that IT security teams are feeling the effect of the IT skills shortage. Yet this is also an opportunity for individuals. The majority of IT security professionals surveyed believe this is a good time to join the profession: 86 percent say the industry will grow over the next three years, and 13 percent say it will “boom”. There is also an opportunity, and a need, for women in the industry: 89 percent of respondents identified as male and 9 percent as female. More than 37 percent say they have better prospects than a year ago, and the factors attracting people to security jobs are the same as then: remuneration, followed by scope for progression and variety of work. Insufficient money or a lack of opportunity also cause people to leave security positions, yet the top factor causing people to leave their jobs is bad or ineffectual management.

“In the middle of a skills shortage, organisations need to treat their workers carefully. Losing them through a lack of investment, through failing to help develop skills, or simple poor management, cannot be allowed,” continued Amanda Finch. “At the same time, they cannot simply hire anyone to fill the skills gap – bringing the wrong person into a role can be a greater risk than an empty seat. Instead, organisations must understand what roles they need to fill; what skills those roles demand; and what skills applicants have. Armed with this, businesses can fill roles and support workers throughout their careers with the development, opportunities and training they need. This doesn’t only mean developing technical skills, but the social, organisational and strategic skills that are essential to put security at the heart of the business.”

Link11, a leader in cloud-based anti-DDoS protection, has published its DDoS statistics for Q2 2019. The data shows that the quarter saw a massive 97% year-on-year increase in average attack bandwidth, up from 3.3 Gbps in Q2 2018 to 6.6 Gbps in Q2 2019.

These attacks are easily capable of overloading many companies’ broadband connections. There are several DDoS-for-hire services offering attacks between 10 and 100 Gbps for a modest fee. Currently, one DDoS provider is offering free DDoS attacks of up to 200 Mbps bandwidth for a duration of five minutes.

The maximum attack volumes seen by Link11 between April and June 2019 also increased by 25% year-on-year, to 195 Gbps from 156 Gbps in Q2 2018. In addition, 19 more high-volume attacks with bandwidths over 100 Gbps were registered in Q2 2019.
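As a quick check on the Link11 growth figures, the Python sketch below computes year-on-year change from the rounded bandwidths quoted above. The rounded averages give exactly 100% rather than the stated 97%, which suggests (an assumption, since the underlying data is not given here) that Link11 worked from unrounded values; the 25% rise in maximum attack volume reproduces exactly. The function name is illustrative.

```python
def yoy_change_pct(previous: float, current: float) -> float:
    """Year-on-year change as a percentage of the earlier value."""
    return (current - previous) / previous * 100


# Rounded figures from the Link11 Q2 statistics, in Gbps.
average_change = yoy_change_pct(3.3, 6.6)   # average attack bandwidth
maximum_change = yoy_change_pct(156, 195)   # maximum attack volume

print(f"average: {average_change:.0f}%")  # average: 100%
print(f"maximum: {maximum_change:.0f}%")  # maximum: 25%
```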

Rolf Gierhard, Vice President Marketing at Link11 said: "Too many companies still have the wrong idea when it comes to the threat posed by DDoS attacks. Our data shows that the gap between attack volumes, and the capability of corporate IT infrastructures to withstand them, is widening from quarter to quarter. Given the scale of the threat that organizations are facing, and the fact that the attacks are deliberately aimed at causing maximum disruption, it’s clear that businesses need to deploy advanced techniques to protect themselves against DDoS exploits."

Increasing complexity of attacks

Multi-vector attacks posed an additional threat in Q2 2019, with a significant increase in complex attack patterns. The proportion of multi-vector attacks grew from 45% in Q2 2018 to 63% in the second quarter of 2019. Attackers most frequently combined three vectors (47%), followed by two vectors (35%) and four vectors (15%). The maximum number of attack vectors seen was seven.
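The vector counts above cover only three cases. A minimal Python sketch, assuming the 47, 35 and 15 percent figures are shares of multi-vector attacks only and that they sum to 100, shows how much is left for attacks combining five to seven vectors, and roughly what proportion of all attacks used exactly three vectors.

```python
# Link11, Q2 2019: how many vectors multi-vector attacks combined (percent).
vector_shares = {3: 47, 2: 35, 4: 15}
multi_vector_share = 63  # percent of all attacks that were multi-vector

listed = sum(vector_shares.values())
# Assumption: shares sum to 100, so the rest combined five to seven vectors.
remainder = 100 - listed
# Assumption: vector shares are fractions of multi-vector attacks only.
three_vector_overall = multi_vector_share * vector_shares[3] / 100

print(listed, remainder)               # 97 3
print(round(three_vector_overall, 1))  # 29.6
```

On those assumptions, around 3 percent of multi-vector attacks used five or more vectors, and roughly 30 percent of all attacks combined exactly three.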

Further findings from Link11’s Q2 DDoS statistics include:

New research from Databarracks has revealed organisations are getting better at understanding what IT downtime costs their business.

Data taken from its recently released Data Health Check survey reveals that fewer than one in five organisations (19 per cent) do not know how much IT downtime costs their business. This is down from over a third (35 per cent) in 2017.

Peter Groucutt, managing director of Databarracks, discusses these findings further:

“Evidence has historically suggested organisations struggle to consider costs, but we’re now seeing companies thinking much more broadly about the financial impact of IT-related downtime.”

Groucutt stresses that having a complete picture of what IT downtime costs your organisation enables you to make better-informed decisions on issues relating to IT resilience, supplier management and continuity planning: “There are several types of indirect costs that need to be considered when estimating the financial impact of an outage on your organisation.

“The obvious costs are well known to a business. They include staff wages, lost revenue and any costs tied to fixing an outage. It is important, however, to look beyond these for a more holistic view of the financial impact an outage will have. ‘Hidden’ or intangible costs, such as damage to reputation, can often outweigh the more obvious, immediate costs. Further research from our Data Health Check study revealed that reputational damage is the second biggest worry for organisations during a disaster, behind only revenue loss.

“The problem with these intangible costs is they aren’t easy to estimate, and because they often take time to materialise, they can be excluded from calculations. It will always be difficult to secure budget for IT resilience if you can’t show the board a clear picture of the impact downtime will have. Presenting a complete downtime cost immediately puts the cost of investment into context and will help IT departments make the improvements they need. It’s encouraging to see organisations thinking more holistically and factoring these previously ignored costs into their budgeting.”
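Groucutt’s distinction between obvious and hidden costs can be expressed as a simple additive model. The Python sketch below is a hypothetical illustration, not a Databarracks method: the class, its field names and the example figures are invented for this purpose, and the intangible cost is a single estimated input precisely because, as he notes, it is hard to calculate.

```python
from dataclasses import dataclass


@dataclass
class OutageEstimate:
    """Hypothetical inputs for one outage; all figures are placeholders."""
    hours: float
    staff_cost_per_hour: float    # wages of staff idled or diverted
    revenue_lost_per_hour: float  # sales that cannot be completed
    fix_cost: float               # one-off remediation spend
    intangible_cost: float        # estimate for reputational damage etc.

    def direct_cost(self) -> float:
        """The 'obvious' costs: wages, lost revenue and fixing the outage."""
        hourly = self.staff_cost_per_hour + self.revenue_lost_per_hour
        return self.hours * hourly + self.fix_cost

    def total_cost(self) -> float:
        """Direct costs plus the estimated hidden or intangible costs."""
        return self.direct_cost() + self.intangible_cost


# Illustrative numbers only, not taken from the Databarracks survey.
outage = OutageEstimate(hours=4, staff_cost_per_hour=2_000,
                        revenue_lost_per_hour=10_000, fix_cost=5_000,
                        intangible_cost=60_000)
print(outage.direct_cost())  # 53000
print(outage.total_cost())   # 113000
```

In this made-up example the reputational estimate alone exceeds the direct cost, which is exactly the situation Groucutt warns is missed when only the obvious costs are counted.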

Zerto has published the full findings of its sponsored IDC survey, Worldwide Business Resilience Readiness Thought Leadership Survey. The resulting report revealed that 91% of respondents have experienced a tech-related disruption in the past two years, and that 82% of respondents said data protection and recovery are important to their digital transformation projects.

The white paper illustrates a perception gap between IT and business decision makers regarding the importance of data availability and the success of digital transformation/IT transformation initiatives.

Key indicators of perception gap, supported by research findings:

Optimizing resilience planning, the report says, plays an important role in minimizing the financial burden and negative impact of IT-related business disruption. These types of disruptions, the research details, are costing organizations significantly:

The white paper concludes that because most respondents have not optimized their IT resilience strategy, cloud and transformation initiatives are at risk of delay or failure.

However, 90% of respondents indicated an intent to increase their IT resilience investments over the next two years.

For many organizations, efforts to improve resilience are taking place against a backdrop of changing data protection and disaster recovery needs:

Interestingly, almost 100% of respondents anticipate cloud playing a role in their organization's future disaster recovery or data protection plans. But today, according to respondents, integrated adoption of cloud-based protection solutions remains low:

Currently only 12.4% of IT budgets (on average) are spent on IT resilience hardware/software/cloud solutions.

Phil Goodwin, Research Director, IDC, commented:

“These survey results indicate that most respondents have not optimized their IT resilience strategy, evidenced by the high levels of IT and business-related disruptions. However, the majority of organizations surveyed will undertake a transformation, cloud, or modernization project within the next two years. This illustrates the need for all organizations to begin architecting a plan for IT resilience to ensure the success of these initiatives.”

He added, “Without such a plan, the high prevalence of disruptive events, unplanned downtime, and data loss indicated by respondents will continue to put cloud and transformation initiatives at risk of delay or failure — creating a financial burden and negative impact to an organization's competitive advantage.”

Avi Raichel, CIO, Zerto, added:

“The resilience of business IT is under constant pressure. Malicious attacks and outages are causing enormous levels of disruption, and it’s clear that for many organizations their ability to avoid and mitigate IT-related disruption is not where it needs to be, and is actually holding back their ability to focus on innovating. IT leaders and professionals clearly understand the pressing requirement for better resilience, and it’s to everyone’s benefit that the momentum behind IT resilience is really building.”

Demand for cloud computing continues to drive European data centre market.

Q2 2019 saw a record take-up of 57MW across the four largest colocation markets in Europe. According to research from CBRE, the world's leading real estate advisor, the FLAP markets of Frankfurt, London, Amsterdam and Paris recorded 98MW of take-up in the first half of the year, 11MW above the previous H1 record.

CBRE analysis shows that Frankfurt recorded 44MW of take-up in the first half of the year and is set to beat London’s 2018 full-year total of 77MW, the current highest total for any individual market. Frankfurt’s take-up in H2 will be bolstered by pre-lets to some of the large new facilities set to launch in the market.
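The CBRE figures imply a few numbers that are not stated directly. The Python sketch below derives them, assuming that H1 take-up is simply Q1 plus Q2 and that ‘beat’ means Frankfurt must exceed London’s 77MW rather than match it.

```python
# Take-up figures in MW as reported by CBRE.
q2_2019_flap = 57
h1_2019_flap = 98
frankfurt_h1 = 44
london_2018_full_year = 77

# Implied by "11MW above the previous H1 record".
previous_h1_record = h1_2019_flap - 11
# Assumption: H1 take-up is Q1 plus Q2.
q1_2019_flap = h1_2019_flap - q2_2019_flap
# Frankfurt's H2 take-up must exceed this to beat London's 2018 total.
frankfurt_h2_needed = london_2018_full_year - frankfurt_h1

print(previous_h1_record, q1_2019_flap, frankfurt_h2_needed)  # 87 41 33
```

On those assumptions, Frankfurt needs more than 33MW in the second half, about three-quarters of its first-half total, to set a new single-market record.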

London’s subdued start to the year continued, with the city recording the lowest take-up of any FLAP market in the first half of 2019. CBRE attributes this to the hyperscale cloud companies having procured record capacity in London during 2018; these companies are now selling that capacity before they need to acquire more.

As demand for data centre capacity continues to grow, constraints on the availability of land and power in some areas are driving data centre developer-operators to create new sub-markets within the major FLAP cities. Concerns over space and power led the municipalities of Amsterdam and Haarlemmermeer to jointly impose a temporary halt on the development of new data centres. This will not slow the rate of growth in the Amsterdam market, but may drive developer-operators to new areas of the city.

Mitul Patel, Head of EMEA Data Centre Research at CBRE commented:

“There is no let-up to the extraordinary levels of activity in the European colocation sector. Take-up records are broken every quarter and hyperscale cloud companies continue to be the epicentre of this. As a consequence, winning hyperscale business is more competitive than ever and companies are competing on a number of criteria, including price. To develop its xScale hyperscale product Equinix has gained funding from Singapore’s Sovereign Wealth Fund, GIC.”

Fewer than a third of banks and telcos analyse applications before cloud migration.

CAST, a leader in Software Intelligence, has released its annual global cloud migration report. The report analyzes application modernization priorities in financial and telecommunications firms.

Findings show that critical missteps mean cloud migrations in mature institutions are falling short of expectations, with just 40% meeting targets for cost, resiliency and planned user benefits. A lack of pre-migration intelligence and fear of modernizing legacy mainframe applications are the main drivers of these shortcomings. Adoption of microservices as a modernization technique is also faltering for lack of funding.

While institutions with legacy processes realise only a third of their target benefits from cloud migration, cloud-native approaches are enabling FinTech firms to outperform traditional banks, achieving more than half of their target benefits.

Fewer than 35% of technology leaders use freely available analysis tools. There is a systematic failure to use Software Intelligence, a deep analysis of software architecture, to assess whether the underlying applications are ready for cloud migration. IT leaders must ensure the right architectural model and compliance are in place to avoid increasing technical debt. Left unchecked, this leads to more IT meltdowns such as TSB’s £330m re-platforming crisis in 2018, with customers paying the price for these mistakes.

More than 50% of banks and telcos are effectively taking leaps of faith, not undertaking essential analysis-led evaluations to support and facilitate cloud migrations. Instead, half the CTOs surveyed use gut instinct and ad-hoc surveys with application owners as the primary basis of their decision to move applications to the cloud. IT leaders need to adopt an analysis-led approach over gut instinct to implement the right cloud migration strategy and realise all potential benefits of migrating to the cloud.

Greg Rivera, VP CAST Highlight at CAST, commented on the findings: “Pilots going into storms turn to their instruments. If you run headfirst into a cloud migration without objectively assessing your applications, you’re flying in the dark.

“Even one small change to an application has a ‘butterfly effect’ on the rest of the code set, so a disruption as big as cloud migration has detrimental effects including IT outages and loss of business. Migration to the cloud is vital when digitally transforming a business. But it needs to be done right if organizations want success instead of suffering.”

More than 40% of software leaders have yet to define a class-based approach to application modernization. Firms with heavily legacy processes tend to rehost apps, yet rehosting (so-called ‘lift-and-shift’) benefits apps with up to three years left before end of life. Existing, continuously evolving apps, however, should be re-platformed and restructured during cloud migration. To complete a migration successfully, first gather intelligence and assess applications objectively.

Armed with battle scars, software leaders at banks and insurance firms are revisiting their initial ‘lift-and-shift’ approach to cloud migration. While FinTech firms outperform mature institutions on cloud-native apps, banks lead the way on cloud-ready applications, with just under 50% rewriting applications. A European Chief Digital Architect said, “Cloud migration is only really a problem if you’re moving workloads without changing the way they are shaped.”

New data from Synergy Research Group shows that 52 data center-oriented M&A deals closed in the first half of 2019, up 18% from the first half of 2018 and continuing a strong growth trend seen over the last four years. The number of deals closed in the first half exceeded the total number closed in the whole of 2016. 2019 is on course to be another record year for data center M&A deal volume, with eight more deals having closed since the beginning of July and 14 more agreed with formal closure pending, on top of the regular flow of M&A activity. In total, since the beginning of 2015 Synergy has now identified well over 300 closed deals with an aggregated value of over $65 billion. Acquisitions by public companies have accounted for 57% of the deal value, while private equity buyers have accounted for 53% of the deal volume.

In terms of deal value, the story is a little different from deal count, as the trend is skewed by a very small number of huge multi-billion dollar acquisitions. Eleven such deals were closed during the 2017-2018 period, while 2019 has yet to see a multi-billion deal closure. Since 2015 the largest deals to be closed are the acquisition of DuPont Fabros by Digital Realty, the Equinix acquisition of Verizon’s data centers and the Equinix acquisition of Telecity. Over the 2015-2019 period, by far the largest investors have been Equinix and Digital Realty, the world’s two leading colocation providers. In aggregate they account for 36% of total deal value over the period. Other notable data center operators who have been serial acquirers include CyrusOne, Iron Mountain, Digital Bridge/DataBank, NTT and Carter Validus.

“Analysis of data center M&A activity helps to affirm some clear trends in the industry, not least of which is that enterprises increasingly do not want to own or operate their own data centers,” said John Dinsdale, a Chief Analyst at Synergy Research Group. “As enterprises either shift workloads to cloud providers or use colocation facilities to house their IT infrastructure, more and more data centers are being put up for sale. This in turn is driving change in the colocation market, with industry giants on a never-ending quest to grow their global footprint and a constant ebb and flow of ownership among small local players. We expect to see a lot more data center M&A over the next five years.”

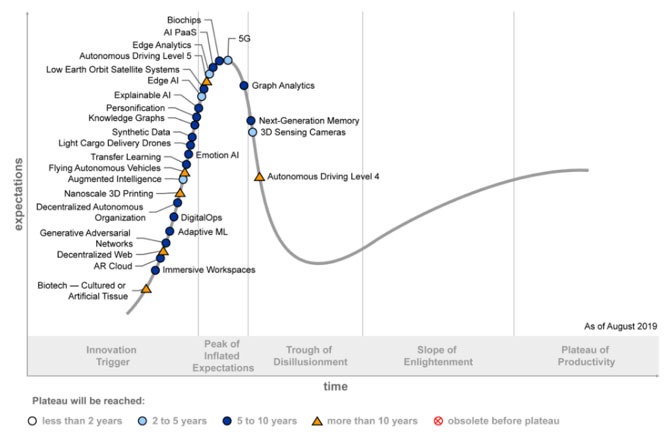

The 29 must-watch technologies on Gartner, Inc.’s Hype Cycle for Emerging Technologies, 2019 reveal five distinct emerging technology trends that create and enable new experiences, leveraging artificial intelligence (AI) and other constructs to help organizations take advantage of emerging digital ecosystems.

“Technology innovation has become the key to competitive differentiation. The pace of change in technology continues to accelerate as breakthrough technologies are continually challenging even the most innovative business and technology decision makers to keep up,” said Brian Burke, research vice president at Gartner. “Technology innovation leaders should use the innovation profiles highlighted in the Hype Cycle to assess the potential business opportunities of emerging technologies.”

The Hype Cycle for Emerging Technologies is unique among Gartner Hype Cycles because it distills insights from more than 2,000 technologies into a succinct set of 29 emerging technologies and trends. This Hype Cycle specifically focuses on the set of technologies that show promise in delivering a high degree of competitive advantage over the next five to 10 years (see Figure 1).

Figure 1. Hype Cycle for Emerging Technologies, 2019

Source: Gartner (August 2019)

Five Emerging Technology Trends

Sensing and Mobility

By combining sensor technologies with AI, machines are gaining a better understanding of the world around them, enabling mobility and the manipulation of objects. Sensing technologies are a core component of the Internet of Things (IoT) and of the vast amounts of data it collects. Applying intelligence to that data yields many types of insight that can be applied to many scenarios.

For example, over the next decade AR cloud will create a 3D map of the world, enabling new interaction models and in turn new business models that will monetize physical space.

Enterprises that are seeking to leverage sensing and mobility capabilities should consider the following technologies: 3D-sensing cameras, AR cloud, light-cargo delivery drones, flying autonomous vehicles and autonomous driving Levels 4 and 5.

Augmented Human

Augmented human advances enable the creation of cognitive and physical improvements as an integral part of the human body. One example is the ability to provide superhuman capabilities, such as limb prosthetics with characteristics that can exceed the highest natural human performance.

Emerging technologies focused on extending humans include biochips, personification, augmented intelligence, emotion AI, immersive workspaces and biotech (cultured or artificial tissue).

Postclassical Compute and Comms

For decades, classical core computing, communication and integration technologies have made significant advances largely through improvements in traditional architectures — faster CPUs, denser memory and increasing throughput as predicted by Moore’s Law. The next generations of these technologies adopt entirely new architectures. This category includes not only entirely new approaches, but also incremental improvements that have potentially dramatic impacts.

For example, low earth orbit (LEO) satellites can provide low-latency internet connectivity globally. These constellations of small satellites will enable connectivity for the 48% of homes that are currently not connected, providing new opportunities for economic growth for unserved countries and regions. “With only a few satellites launched, the technology is still in its infancy, but over the next few years it has the potential for a dramatic social and commercial impact,” said Mr. Burke.

Enterprises should evaluate technologies such as 5G, next-generation memory, LEO systems and nanoscale 3D printing.

Digital Ecosystems

Digital ecosystems leverage an interdependent group of actors (enterprises, people and things) sharing digital platforms to achieve a mutually beneficial purpose. Digitalization has facilitated the deconstruction of classical value chains, leading to stronger, more flexible and resilient webs of value delivery that are constantly morphing to create new and improved products and services.

Critical technologies to be considered include: DigitalOps, knowledge graphs, synthetic data, decentralized web and decentralized autonomous organizations.

Advanced AI and Analytics

Advanced analytics comprises the autonomous or semiautonomous examination of data or content using sophisticated techniques and tools, typically beyond those of traditional business intelligence (BI).

“The adoption of edge AI is increasing for applications that are latency-sensitive (e.g., autonomous navigation), subject to network interruptions (e.g., remote monitoring, natural language processing [NLP], facial recognition) and/or are data-intensive (e.g., video analytics),” said Mr. Burke.

The technologies to track include adaptive machine learning (ML), edge AI, edge analytics, explainable AI, AI platform as a service (PaaS), transfer learning, generative adversarial networks and graph analytics.

This year, Gartner refocused the Hype Cycle for Emerging Technologies to shift toward introducing new technologies that have not been previously highlighted in past iterations of this Hype Cycle. While this necessitates retiring most of the technologies that were highlighted in the 2018 version, it does not mean that those technologies have ceased to be important.

5.8 billion enterprise and automotive IoT endpoints will be in use in 2020

Gartner, Inc. forecasts that the enterprise and automotive Internet of Things (IoT) market will grow to 5.8 billion endpoints in 2020, a 21% increase from 2019. By the end of 2019, 4.8 billion endpoints are expected to be in use, up 21.5% from 2018.

Utilities will be the highest user of IoT endpoints, totaling 1.17 billion endpoints in 2019, and increasing 17% in 2020 to reach 1.37 billion endpoints. “Electricity smart metering, both residential and commercial, will boost the adoption of IoT among utilities,” said Peter Middleton, senior research director at Gartner. “Physical security, where building intruder detection and indoor surveillance use cases will drive volume, will be the second largest user of IoT endpoints in 2020.”

Building automation, driven by connected lighting devices, will be the segment with the largest growth rate in 2020 (42%), followed by automotive and healthcare, which are forecast to grow 31% and 29% in 2020, respectively (see Table 1). In healthcare, chronic condition monitoring will drive the most IoT endpoints, while in automotive, cars with embedded IoT connectivity will be supplemented by a range of add-on devices to accomplish specific tasks, such as fleet management.

Table 1

IoT Endpoint Market by Segment, 2018-2020, Worldwide (Installed Base, Billions of Units)

| Segment | 2018 | 2019 | 2020 |
|---|---|---|---|
| Utilities | 0.98 | 1.17 | 1.37 |
| Government | 0.40 | 0.53 | 0.70 |
| Building Automation | 0.23 | 0.31 | 0.44 |
| Physical Security | 0.83 | 0.95 | 1.09 |
| Manufacturing & Natural Resources | 0.33 | 0.40 | 0.49 |
| Automotive | 0.27 | 0.36 | 0.47 |
| Healthcare Providers | 0.21 | 0.28 | 0.36 |
| Retail & Wholesale Trade | 0.29 | 0.36 | 0.44 |
| Information | 0.37 | 0.37 | 0.37 |
| Transportation | 0.06 | 0.07 | 0.08 |
| Total | 3.96 | 4.81 | 5.81 |

Source: Gartner (August 2019)
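The growth rates quoted in the text can be recomputed directly from the table. The sketch below copies Gartner's rounded installed-base figures into a dictionary (`INSTALLED_BASE` and `growth` are names chosen here for illustration) and ranks segments by 2019-to-2020 growth. It reproduces the 42%, 31%, 29% and 21% figures; on these rounded numbers Government (0.53 to 0.70, about 32%) also lands near automotive, but with values published to two decimal places such close comparisons fall within rounding error, so the ranking should be treated as approximate.

```python
# Installed base in billions of units (Table 1, Gartner, August 2019).
# Tuple order: (2018, 2019, 2020). Values are rounded as published.
INSTALLED_BASE = {
    "Utilities": (0.98, 1.17, 1.37),
    "Government": (0.40, 0.53, 0.70),
    "Building Automation": (0.23, 0.31, 0.44),
    "Physical Security": (0.83, 0.95, 1.09),
    "Manufacturing & Natural Resources": (0.33, 0.40, 0.49),
    "Automotive": (0.27, 0.36, 0.47),
    "Healthcare Providers": (0.21, 0.28, 0.36),
    "Retail & Wholesale Trade": (0.29, 0.36, 0.44),
    "Information": (0.37, 0.37, 0.37),
    "Transportation": (0.06, 0.07, 0.08),
    "Total": (3.96, 4.81, 5.81),
}


def growth(segment: str, start: int = 1, end: int = 2) -> float:
    """Fractional growth between two columns (default: 2019 -> 2020)."""
    values = INSTALLED_BASE[segment]
    return (values[end] - values[start]) / values[start]


segments = [s for s in INSTALLED_BASE if s != "Total"]
for segment in sorted(segments, key=growth, reverse=True):
    print(f"{segment:35s} {growth(segment):6.1%}")
print(f"{'Total':35s} {growth('Total'):6.1%}")
```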

Top Use-Case Opportunities Vary by Region

Similar to 2019, residential electricity smart metering, which can be used for more accurate metering and billing in the home, will be the top use case for Greater China and Western Europe in 2020, and will represent 26% and 12% of total IoT endpoints, respectively. North America, in comparison, will see its highest IoT endpoint adoption in building intruder detection, such as door and window sensors, which will represent 8% of total IoT endpoints.

North America and Greater China Have the Biggest Market for Endpoint Electronics Revenue

In 2020, revenue from endpoint electronics will total $389 billion globally and will be concentrated in three regions: North America, Greater China and Western Europe. These three regions will represent 75% of the overall endpoint electronics revenue. North America will record $120 billion, Greater China will achieve $91 billion and Western Europe will come in third, totaling $82 billion in 2020.

In 2020, the two use cases that will produce the most endpoint electronics revenue will be consumer connected cars and networkable printing and photocopying, totaling $72 billion and $38 billion, respectively. Connected cars will retain a significant portion of the total endpoint electronics spending resulting from increasing electronics complexity and manufacturers implementing connectivity in a greater percentage of their vehicle production moving forward. While printers and photocopiers will contribute significant spending in 2020, the market will decline slowly and other use cases such as indoor surveillance will rise as governments focus on public safety.

“Overall, end users will need to prepare to address an environment where the business units will increasingly buy IoT-enabled assets without policies for support, data ownership or integration into existing business applications,” said Alfonso Velosa, research vice president at Gartner. This will require the CIO’s team to start developing a policy and architecture-based approach to support business units’ objectives, while protecting the organization from data threats.

“Product managers will need to deliver but also to clearly and loudly communicate their IoT-based business value to specific verticals and their business processes, if they are to succeed in this crowded arena,” concluded Mr. Velosa.

Worldwide 5G network infrastructure revenue to reach $4.2 billion in 2020

In 2020, worldwide 5G wireless network infrastructure revenue will reach $4.2 billion, an 89% increase from 2019 revenue of $2.2 billion, according to Gartner, Inc.

Additionally, Gartner forecasts that investments in 5G NR network infrastructure will account for 6% of the total wireless infrastructure revenue of communications service providers (CSPs) in 2019, and that this figure will reach 12% in 2020 (see Table 1).

“5G wireless network infrastructure revenue will nearly double between 2019 and 2020,” said Sylvain Fabre, senior research director at Gartner. “For 5G deployments in 2019, CSPs are using non-stand-alone technology. This enables them to introduce 5G services that run more quickly, as 5G New Radio (NR) equipment can be rolled out alongside existing 4G core network infrastructure.”

In 2020, CSPs will roll out stand-alone 5G technology, which will require 5G NR equipment and a 5G core network. This will lower costs for CSPs and improve performance for users.

Table 1: Wireless Infrastructure Revenue Forecast, Worldwide, 2018-2021 (Millions of Dollars)

| Segment | 2018 | 2019 | 2020 | 2021 |
|---|---|---|---|---|
| 5G | 612.9 | 2,211.4 | 4,176.0 | 6,805.6 |
| 2G | 1,503.1 | 697.5 | 406.5 | 285.2 |
| 3G | 5,578.4 | 3,694.0 | 2,464.3 | 1,558.0 |
| LTE and 4G | 20,454.7 | 19,322.4 | 18,278.2 | 16,352.7 |
| Small Cells | 4,785.6 | 5,378.4 | 5,858.1 | 6,473.1 |
| Mobile Core | 4,599.0 | 4,621.0 | 4,787.3 | 5,009.5 |
| Total | 37,533.6 | 35,924.7 | 35,970.5 | 36,484.1 |

Due to rounding, figures may not add up precisely to the totals shown.

Source: Gartner (August 2019)
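The headline percentages follow from the wireless infrastructure table: 5G's share of total revenue is its row divided by the column total, and the year-on-year increase is the ratio of consecutive 5G values. A minimal sketch, with the table copied into a dictionary (`REVENUE`, `total` and `share_5g` are illustrative names, not Gartner's):

```python
# Wireless infrastructure revenue, millions of US dollars (Gartner, August 2019).
YEARS = (2018, 2019, 2020, 2021)
REVENUE = {
    "5G": (612.9, 2211.4, 4176.0, 6805.6),
    "2G": (1503.1, 697.5, 406.5, 285.2),
    "3G": (5578.4, 3694.0, 2464.3, 1558.0),
    "LTE and 4G": (20454.7, 19322.4, 18278.2, 16352.7),
    "Small Cells": (4785.6, 5378.4, 5858.1, 6473.1),
    "Mobile Core": (4599.0, 4621.0, 4787.3, 5009.5),
}


def total(year: int) -> float:
    """Sum all segments for one year (may differ slightly from the published total due to rounding)."""
    i = YEARS.index(year)
    return sum(row[i] for row in REVENUE.values())


def share_5g(year: int) -> float:
    """5G NR share of total wireless infrastructure revenue for one year."""
    return REVENUE["5G"][YEARS.index(year)] / total(year)


growth_2020 = REVENUE["5G"][2] / REVENUE["5G"][1] - 1
for year in (2019, 2020):
    print(f"{year}: 5G share {share_5g(year):.1%}")
print(f"5G revenue growth 2019->2020: {growth_2020:.0%}")
```

Summing the segments rather than reading the published total makes the rounding note under the table visible: the 2020 column adds up to 35,970.4 rather than 35,970.5.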

5G Rollout Will Accelerate Through 2020

5G services will launch in many major cities in 2019 and 2020. Services have already begun in the U.S., South Korea and some European countries, including Switzerland, Finland and the U.K. CSPs in Canada, France, Germany, Hong Kong, Spain, Sweden, Qatar and the United Arab Emirates have announced plans to accelerate 5G network building through 2020.

As a result, Gartner estimates that 7% of CSPs worldwide have already deployed 5G infrastructure in their networks.

CSPs Will Increasingly Aim 5G Services at Enterprises

Although consumers represent the main segment driving 5G development, CSPs will increasingly aim 5G services at enterprises. 5G networks are expected to expand the mobile ecosystem to cover new industries, such as the smart factory, autonomous transportation, remote healthcare, agriculture and retail sectors, as well as enable private networks for industrial users.

Equipment vendors view private networks for industrial users as a market segment with significant potential. “It’s still early days for the 5G private-network opportunity, but vendors, regulators and standards bodies have preparations in place,” said Mr. Fabre. Germany has set aside the 3.7GHz band for private networks, and Japan is reserving the 4.5GHz and 28GHz bands for the same purpose. Ericsson aims to deliver solutions via CSPs in order to build private networks with high levels of reliability and performance and secure communications. Nokia has developed a portfolio to enable large industrial organizations to invest directly in their own private networks.

“National 5G coverage will not occur as quickly as with past generations of wireless infrastructure,” said Mr. Fabre. “To maintain average performance standards as 5G is built out, CSPs will need to undertake targeted strategic improvements to their 4G legacy layer, by upgrading 4G infrastructure around 5G areas of coverage. A less robust 4G legacy layer adjoining 5G cells could lead to real or perceived performance issues as users move from 5G to 4G/LTE Advanced Pro. This issue will be most pronounced from 2019 through 2021, a period when 5G coverage will be focused on hot spots and areas of high population density.”

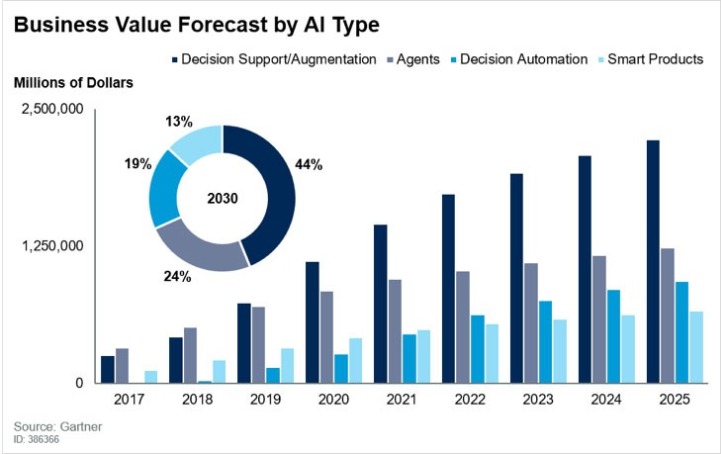

AI Augmentation to create $2.9 trillion of business value in 2021

In 2021, artificial intelligence (AI) augmentation will create $2.9 trillion of business value and 6.2 billion hours of worker productivity globally, according to Gartner, Inc.

Gartner defines augmented intelligence as a human-centered partnership model of people and AI working together to enhance cognitive performance. This includes learning, decision making and new experiences.

“Augmented intelligence is all about people taking advantage of AI,” said Svetlana Sicular, research vice president at Gartner. “As AI technology evolves, the combined human and AI capabilities that augmented intelligence allows will deliver the greatest benefits to enterprises.”

Business Value of Augmented Intelligence

Gartner’s AI business value forecast highlights decision support/augmentation as the largest type of AI by business value-add with the fewest early barriers to adoption (see Figure 1). By 2030, decision support/augmentation will surpass all other types of AI initiatives to account for 44% of the global AI-derived business value.

Figure 1: Worldwide Business Value by AI Type (Millions of Dollars)

Source: Gartner (August 2019)

Augmented Intelligence Enhances Customer Experience

Customer experience is the primary source of AI-derived business value, according to the Gartner AI business value forecast. Augmented intelligence reduces mistakes while delivering customer convenience and personalization at scale, democratizing what was previously available to the select few. “The goal is to be more efficient with automation, while complementing it with a human touch and common sense to manage the risks of decision automation,” said Ms. Sicular.

“The excitement about AI tools, services and algorithms misses a crucial point: The goal of AI should be to empower humans to be better, smarter and happier, not to create a ‘machine world’ for its own sake,” said Ms. Sicular. “Augmented intelligence is a design approach to winning with AI, and it assists machines and people alike to perform at their best.”

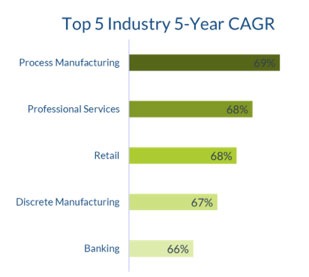

Despite a slowing global economy and the looming trade war between the United States and China, purchases of information and communications technology (ICT) are expected to maintain steady growth over the next five years. A new forecast from International Data Corporation (IDC) predicts worldwide ICT spending on hardware, software, services, and telecommunications will achieve a compound annual growth rate (CAGR) of 3.8% over the 2019-2023 forecast period, reaching $4.8 trillion in 2023.

"Global market conditions remain volatile, and although the economy has performed broadly better than expected in the past six months in many countries, a sense of uncertainty over the short-term economic and business outlook has been rising at the same time," said Serena Da Rold, program manager in IDC's Customer Insights and Analysis group. "Confidence indicators are fluctuating on a monthly basis, depending on short-term indicators ranging from speculation over tariffs and trade wars to political wild cards, with a potential global slowdown looming for 2019 and 2020. End-user surveys reflect the impact of this uncertainty on business decision-making, but our forecasts remain roughly stable overall for 2019 compared with our previous release, and slightly accelerated in the medium term, driven by stronger growth in software and hardware. Digital transformation and the adoption of automation technologies will be driving investments in applications, analytics, middleware, and data management software, as well as increasing demand for server and storage capacity."

Commercial purchases will account for nearly two thirds of all ICT spending by 2023, up from 60.4% in 2018 and growing at a solid five-year CAGR of 5.1%. Banking and discrete manufacturing will be the industries spending the most on ICT over the forecast period followed by professional services, which will also see the fastest growth in ICT spending, driven largely by service provider spending. Media and personal and consumer services will also grow nicely as these companies transform their businesses to offer new services and improve customer experience.

While purchases for planned upgrades and refresh cycles will continue to be the largest driver of commercial ICT spending, new investments in the technologies and services that enable the digital transformation (DX) of business models, products and services, and organizations will be a significant source of spending. IDC recently forecast worldwide DX spending to reach $1.18 trillion in 2019.

Consumer ICT spending will grow at a much slower rate (1.5% CAGR) resulting in a gradual loss of share over the five-year forecast period. Consumer spending will be dominated by purchases of mobile telecom services and devices (such as smartphones, notebooks, and tablets).

The United States will be the largest geographic market with ICT spending forecast to reach $1.66 trillion in 2023. Western Europe will be the second largest region with $927 billion in ICT spending in 2023, followed by China at $618 billion. China will also be the fastest growing region with a five-year CAGR of 6.1%.

"In the U.S., a favorable business climate and strong consumer confidence continue to buoy technology spending and innovative projects. Tech-intense areas such as the financial services sector and telecom industry are holding strong as they are committed to serving their demanding and evolving customers in new and innovative ways," said Jessica Goepfert, vice president in IDC's Customer Insights and Analysis group. "While the spending is more fragmented, consumer-facing industries like retail and personal and consumer services are also continuing to enjoy the benefits of healthy consumer confidence and higher wages and disposable incomes, and we see investments to develop and deliver an unforgettable customer experience and boost customer loyalty. We continue to monitor the impact of the tariffs and trade wars on the manufacturing sector, where there are still bright spots, namely around projects that enable the efficient utilization of fixed assets while maximizing capacity utilization."

"Digital transformation is catching up in Asia/Pacific at an accelerated pace, and this will continue to drive significant investments in technologies in the next few years, from hardware and services to applications. The investments are driven by both government and enterprises in the region as they understand the value of what these new technologies bring to the overall operational activities. It also harnesses the potential of a lot of initiatives being launched to make the workforce well versed. Upskilling and future-proofing the workforce are on top of employers' and the governments' agenda," said Ashutosh Bisht, senior research manager with IDC's Customer Insights and Analysis group.

Worldwide spending on customer experience (CX) technologies will total $508 billion in 2019, an increase of 7.9% over 2018, according to the inaugural Worldwide Semiannual Customer Experience Spending Guide from International Data Corporation (IDC). As companies focus on meeting the expectations of customers and providing a differentiated customer experience, IDC expects CX spending to achieve a compound annual growth rate (CAGR) of 8.2% over the 2018-2022 forecast period, reaching $641 billion in 2022.
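A compound annual growth rate (CAGR) is the constant yearly rate that turns a starting value into an ending value: (end / start) ^ (1 / years) - 1. IDC measures its 8.2% from an unstated 2018 base, so as a rough consistency check, assuming only the $508 billion (2019) and $641 billion (2022) figures quoted above, the sketch below computes the rate those two numbers imply and projects the 2019 figure forward at 8.2%. The function names `cagr` and `project` are illustrative.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: float) -> float:
    """Grow a starting value at a constant annual rate for a number of years."""
    return start * (1 + rate) ** years


# IDC CX spending figures from the text, in billions of US dollars.
cx_2019 = 508.0
cx_2022 = 641.0

print(f"Implied 2019-2022 CAGR: {cagr(cx_2019, cx_2022, 3):.1%}")
print(f"$508B grown at 8.2% for three years: ${project(cx_2019, 0.082, 3):.0f}B")
```

The implied rate comes out at roughly 8%, and the projection lands within a few billion dollars of $641 billion, so the quoted figures are consistent once rounding and the different base year are allowed for.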

IDC defines customer experience (CX) as a functional activity encompassing business processes, strategies, technologies, and services that companies use, irrespective of industry, to provide a better experience for their customer and to differentiate themselves from their competitors. The term customer refers to individuals (B2C) as well as groups (B2B). IDC focuses only on business process and therefore does not include the customer's experience of the actual design of the product that the company sold to the customer, nor does it include aspects specific to the product or service such as the user interface or the product aesthetics.

"Customer experience has become a key differentiator for businesses worldwide. New innovation accelerator technologies like artificial intelligence and data analytics are at the forefront in driving the differentiation for businesses to succeed in their customer experience strategic initiatives," said Craig Simpson, research manager, Customer Insights & Analysis.

CX spending will be distributed somewhat evenly across the 16 use cases identified by IDC. In fact, the top six use cases will account for less than one third of overall spending this year. The CX use case that will see the most spending in 2019 and throughout the forecast is customer care and support followed by order fulfillment and interaction management. The use cases that will see the fastest spending growth over the five-year forecast period are AI-driven engagement, interaction management, and ubiquitous commerce.

The retail industry will spend the most on CX technologies in 2019 ($56.7 billion) and throughout the forecast. Digital marketing, AI-driven engagement, and order fulfillment will be the use cases that receive the most funding from retail organizations. Discrete manufacturing and banking will be the second and third largest industries in 2019. Customer care and support will be the primary use case for both industries. Retail and healthcare will be the two industries with the fastest spending growth over the forecast period with CAGRs of 13.1% and 11.5% respectively.

From a technology perspective, services will be the largest area of CX spending at $220 billion in 2019. Most of this total will be divided between business services and IT services. Software will be the second largest area of CX technology spending led by CRM applications and content applications. Hardware, including infrastructure and devices, will account for nearly 20% of overall CX spending while telecommunications services will be less than 10% of total spending.

The United States will be the largest geographic market for CX spending in 2019 led by the discrete manufacturing and retail industries. Western Europe will be the second largest region with banking and retail as the top industries. The third largest market will be China, led by healthcare and retail CX spending. China will also see the fastest growth in CX spending with a five-year CAGR of 13.6%.

International Data Corporation (IDC) completed its seventh annual survey examining the latest investment trends in the Internet of Things (IoT) as well as the opportunities and challenges facing IoT buyers worldwide. IDC found the majority of organizations that have deployed IoT projects have determined the KPIs to measure success – however, the specific KPIs differ significantly by industry.

Respondents of IDC's Global IoT Decision Maker Survey include IT and line of business decision makers (director and above) from 29 countries across six industries that have invested or plan to invest in IoT projects. Some key findings include:

An International Data Corporation (IDC) special report on developers, DevOps professionals, IT decision makers, and line of business executives found that developers have significant autonomy with respect to the selection of developer tools and technologies. In addition, developers exercise influence over enterprise purchasing decisions and should be viewed as key stakeholders in IT purchasing and procurement within any organization undergoing a movement to cloud accompanied by an internal digital transformation.

"The autonomy and influence enjoyed by developers today is illustrative of the changing role of developers in enterprise IT in an era of rapidly intensifying digital transformation," said Arnal Dayaratna, research director, Software Development at IDC. "Developers are increasingly regarded as visionaries and architects of digital transformation as opposed to executors of a pre-defined plan delivered by centralized IT leadership."

The study, based on a global survey of 2,500 developers, also found that the contemporary landscape of software development languages and frameworks remains highly fragmented, which creates a range of challenges for developer teams as well as potentially significant implications for the long-term support of applications built today. Given this environment, the languages that are likely to continue gaining traction among developers are those that support a variety of use cases and deployment environments, such as Python and Java, or exhibit specializations that differentiate them from other languages, as exemplified by JavaScript, along with readily available skills as staffing needs expand.

Other key findings from IDC's PaaSView survey include the following:

"Developer interest in DevOps reflects a broader interest in transparency and collaboration that illustrates the trend in software development to not only use open source technologies, but also to integrate open source practices into software development," said Al Gillen, group vice president, Software Development and Open Source at IDC. "Developers prioritize decentralized collaboration and code contributions as well as transparent documentation of the reasoning for code-related decisions."

European blockchain spending to grow to $4.9 billion by 2023

IDC's new Worldwide Semiannual Blockchain Spending Guide predicts continuous growth in blockchain spending across Europe, from over $800 million in 2019 to $4.9 billion in 2023, growing at a CAGR of 65.1% between 2018 and 2023. Despite a smaller CAGR compared with the average growth forecast for 2017–2022, knowledge about opportunities in blockchain is spreading from big enterprises to emerging start-ups looking to ensure secure and reliable management of money, personal data, and assets, which is proving to be necessary in a digitalized and data-driven market.

The perception of blockchain in the European market (and worldwide) is moving away from being only a cryptocurrency tool and a financial-only technology. Blockchain originated in the banking industry, and banking still accounts for 31% of total spending in 2019, with cross-border payments and trade finance the fastest-growing banking use cases. With blockchain well established in banking, other industries are increasingly stepping up and taking part in the digital transformation.

Manufacturing, professional services, retail, and banking show the greatest promise in their future investment in blockchain with above-average CAGRs, hoping to improve transparency and assure authenticity in their businesses.

New use cases are emerging, driven by growing awareness of what blockchain is and what it can and cannot do. Blockchain enables companies to cut out the middleman, thereby saving costs and reducing risks of fraudulent behavior and human error. Identity management is a new use case on the rise in Europe, being implemented in insurance, banking, government, and personal and consumer services. With data emerging as one of the most valuable resources, it is becoming crucial for companies to have an effective and safe way to store, secure, and use consumers' personal data. Blockchain offers a decentralized and encrypted system to do this and it is now being used for a range of purposes, including i-voting (internet/electronic voting) and intellectual property management.

"Companies are beginning to view blockchain not only for its cryptographic means, but rather as a management tool that can keep track of items, information, and customer data. This is something that can be used by the transportation industry to track shipments, by top-quality luxury goods retailers to track provenance, or by real-estate professionals for transparent property management," said Carla La Croce, senior research analyst, Customer Insights and Analysis, IDC. "As spending continues to grow, the market will most likely adapt and security and validity will become a customer standard, with more companies turning to blockchain for a safe and reliable solution."

There are some challenges, however, according to Mohamed Hefny, program manager, Systems and Infrastructure Solutions, IDC. These include the lack of rules and regulations, and getting small producers of goods, like farmers and fishermen, to use the enterprise platforms. "However, the recognition from the European Commission of blockchain's importance to a single digital market and the work done by the big cloud blockchain service providers to create simple mobile apps for their smaller consortium members, and similar developments, are very promising," he said.

One of the key features of the managed services model that appeals to both end-user customers and MSPs themselves is the way services can be added to, augmented or, very occasionally, reduced. This portfolio approach has a lot to recommend it, with the technology delivered, integrated and updated by experts among the vendors and channels. One side effect, however, is that these services need not all be supplied by the same provider, and some players are only just realising this.

While MSPs are concerned that this approach might dilute their relationship with the customer, they also acknowledge that they cannot be expert in every service they deliver; hence the rise of the partner ecosystem.

This is especially evident in security, where the managed security service provider (MSSP) has risen to prominence in the last year. According to the Kaspersky IT Security Risks Survey 2019, 59% of organisations plan to use an MSP in the “near future” to help them reduce their security-related costs, while 43% of businesses value the “dedicated expertise” that comes with outsourcing their IT security support.

Expertise is in short supply in other areas as well. The Internet of Things (IoT) is only now reaching the stage where meaningful, repeatable and reliable solutions are becoming available to channels. As Paul Karrer, EMEA IoT chief at distributor Arrow, says, “Data acquisition needs to be done before any analytics can start. And then the partners doing the data acquisition are not necessarily the ones to do the analytics.”

So there is a big opportunity for channel partners, distributors and technology partners to work together; Karrer certainly sees more partners specialising and working as part of a team.

The ecosystem also includes network specialists, storage experts, and even channels that specialise in marketing, helping customers use their own data to engage with the outside world. These are all areas that require investment and commitment, both to enter and then to sustain the expert level of knowledge required. It is no surprise that many MSPs are realising that they cannot do everything, and are looking to partner.

This is at the heart of the rising trend for ecosystems of managed service providers.

Acting as a brake, however, is the tangle of responsibilities and contracts linked to service supply, including, but not limited to, Service Level Agreements (SLAs). Many current managed services deals have not been tested in adversity; how service issues will be measured, and what raised customer expectations will mean, has yet to be revealed at any scale.

The managed services industry will need to take these issues on board. The multiple layers of supply in the IT industry extend beyond managed services, as many are now recognising. As Tech Data’s European SVP Miriam Murphy told this year’s EMEA GTDC conference for major distributors in Lisbon, there need to be wider changes in distribution contracts to reflect digitalisation and a new spread of responsibilities in the supply chain. Services are a key part of that.

Building the partner ecosystem around services and finding different sorts of partner will be among the debating points at this year’s Managed Services Summits in London on September 18 and in Manchester on October 30.

The Managed Services & Hosting Summits are firmly established as the leading managed services events for the channel. Now in its ninth year, the London Managed Services & Hosting Summit 2019 on September 18 aims to provide insights into how managed services continue to grow and change as customer demands push suppliers into a strategic advisory role, and as ecosystems grow. The Managed Services Summit North, in Manchester, will look at how regional expertise is developing in managed services, with local provision through a partner ecosystem.

DW talks to Mike Rivers, Product Director at GTT, about disrupting the established telecoms market, as the company seeks to help end users ensure that the network part of the overall IT stack is an enabler rather than a bottleneck when it comes to digital transformation.

1. Please can you provide a brief background on the company?

GTT connects people across organisations, around the world and to every application in the cloud. We aim to deliver an outstanding experience to clients through our core values of simplicity, speed and agility. GTT operates a global network and provides a comprehensive suite of cloud networking services to any location in the world.

2. And what have been the major milestones to date?

Through a series of strategic mergers and acquisitions, we have developed a Tier 1 IP network, among the largest in the industry, with over 600 PoPs spanning six continents and service presence in more than 140 countries. As well as building our global network footprint, our M&A activity has deepened our product portfolio, added clients and brought in world-class sales talent. For example, our acquisition of Hibernia in 2017 added a transatlantic fibre network to our portfolio. Last year’s acquisition of Interoute added significant scale, deepening our local presence throughout Europe and adding a pan-European fibre network.

3. And how does GTT distinguish itself in what’s a very busy market?

Our opportunity lies in disrupting the established telecoms marketplace. As a company we don’t have legacy services to protect, and we have the flexibility and entrepreneurial spirit to adopt innovative new technologies to win business and gain market share. Our industry-leading software-defined wide area networking (SD-WAN) service is an example of such innovation, bringing substantial benefits to our clients in terms of improved network efficiency and application performance.

GTT is also exclusively focused on the B2B market, which contrasts with many of the larger incumbent providers that have shifted attention away from serving the enterprise market towards consumer, mobile, and media content-related businesses.

Our global Tier 1 IP backbone also sets us apart. It securely connects client locations to any destination on the internet or to any cloud service provider. We offer the widest range of access options with bundled network security, making it simple and cost-effective to integrate new locations and add network bandwidth as needed.

Finally, we strive to be a company that’s easy to do business with, built on our core values of simplicity, speed and agility. We think this truly sets us apart, and it’s one of the many reasons that we’ve grown so rapidly.

4. Please can you provide a brief overview of the GTT portfolio?

GTT serves national, international and global enterprises, governments and universities, as well as the world’s largest telecoms operators and OTT providers, with a portfolio of network services. These services broadly fall into two categories.

The first is our managed services products, which mainly support enterprise clients. We offer a comprehensive suite of cloud networking services, including wide-area networking (SD-WAN, Ethernet, VPLS, MPLS) and Internet (IP transit, DIA, managed broadband). We also provide managed security and unified communications (Cloud UC and SIP Trunking).

The second category is our network services business. This is the transport and infrastructure (wavelength, dark fibre, Ethernet, colocation) play for large-scale businesses such as carriers, government organisations and OTT household brands.

5. And the GTT managed services?

We deliver cloud networking services to multinational clients. The majority want a global network solution that can meet all of their connectivity needs, so part of the value we deliver is managed network services that take on the full management of critical IT capabilities. This covers everything from network design, implementation, management and monitoring to gathering quotes from our access partners. Clients typically value this because it removes complexity and allows them to focus their scarce IT resources on core business requirements.

6. In more detail, can you talk us through the transport and infrastructure services?

We operate one of the largest, most advanced fibre networks in Europe, connecting 21 countries. As part of our suite of cloud networking services, we sell high-capacity wavelengths to some of the largest cloud providers in the world. Ethernet is another technology we frequently sell for cloud connectivity and low-latency, point-to-point connections. We also sell dark fibre and colocation.

7. And your WAN offering?

From VPLS and MPLS to SD-WAN – we offer multiple flavours of WAN. The type of WAN we offer each client depends on their individual needs.

In the last few years, we’ve seen a significant uptake in SD-WAN, which is a point of differentiation for us. SD-WAN is much more advanced and intelligent than traditional networks. It leverages the GTT internet backbone at both the technical and the service level. SD-WAN combines the power of the internet with greater use of software, so that the network can adapt to the demands being placed on it. Using capabilities like overlay networking and data analytics, we can offer clients agile, cost-efficient ways to get traffic to each location and application. SD-WAN, coupled with our Tier 1 global IP backbone, improves cloud application performance and simplifies cloud adoption.

8. And then there’s voice?

This is a classic networking product. We can provide a suite of unified communications services that offer global reach and improve productivity. Whether businesses are migrating to Cloud UC or connecting voice infrastructure over SIP, GTT offers both. Our voice services help organisations take advantage of the advanced functionality and cost efficiencies of cloud-based service delivery, such as hosted PBX solutions based on soft client technology, backed by the global reach of our SIP-based voice network.

9. And, finally, Internet?

GTT operates one of the top five internet backbones in the world.

We provide a fast and reliable internet experience. We own and operate AS3257, one of the top ranked Tier 1 IP networks in the world, providing the scalability and network reach that organisations need to connect globally.

We reach roughly a third of all internet destinations and have direct connectivity with every major cloud provider across the backbone. This gives our clients a real advantage: we can offer performance guarantees for application connectivity, and our cost base is lower than our competitors’.

10. Focusing in on the WAN offering in more detail, how would you say that the SD-WAN market has developed up to now?

The SD-WAN market has seen a lot of developments in recent years, particularly with new entrants emerging from both the managed service provider and vendor ecosystems. For clients, the question is no longer “if” but “when”. However, because many businesses are still tied into legacy WAN contracts, not all enterprises can move to SD-WAN straight away. What we’re seeing is that these clients are exploring SD-WAN’s capabilities, while the early adopters are already deploying SD-WAN in full production. Thanks to well-developed relationships with over 300 local access partners and one of the largest global Tier 1 IP networks in the industry, we’re well placed to guide clients so they benefit from the full potential of SD-WAN technologies.

11. For example, SD-WAN has a major role to play in a multi-cloud environment?

As enterprises increasingly adopt a multi-cloud approach, they will need a flexible, agile network. SD-WAN isn’t a static network environment like a traditional WAN. Instead, it can make decisions that benefit the performance of a specific application. Because SD-WAN can route traffic over the best available path, businesses gain the agility to optimise the network and prioritise mission-critical or latency-sensitive applications like video and voice.

12. More generally, smarter and faster networks are needed to respond to the demands of modern business?

Absolutely. The network is a key foundation and enabler of IT. Within the world of IT, there are three key areas:

1. Processing data in an application

2. Storing data

3. Moving data between a client and a server – or between applications

The movement of data is where the network comes into play. Given the huge disruption and changes to the way we process and store data, we need more dynamic networking environments that adapt to the needs of applications.

13. Specifically, what networking changes can we expect to see in terms of both topologies and speeds in order to meet the demands of the digital world?